Recently, I have put a lot of time into HashiCorp’s Terraform product. It interests me because of its capability to automatically provision resources across numerous platforms such as vSphere, Azure, and AWS. As I branch out my expertise from on-premises infrastructure to public cloud infrastructure, I need a tool to automate new deployments. Terraform checks the boxes to help me achieve these goals. As I dove deeper into Terraform and its enterprise variant, I discovered Sentinel, a policy framework that HashiCorp built to provide governance across its enterprise products.

The Need for Policy

Many of us in IT enjoy our full admin access in our environments. However, when we provide a self-service method for our users to begin deploying their own resources, we need policies to keep those users in check. This is especially true when we grant our users access to the public cloud, where resources incur hourly or monthly charges. Provisioning resources in the public cloud without guardrails will quickly get out of hand and can rack up a hefty bill. Therefore, some level of governance needs to exist.

Real-World Use Cases

Sentinel is embedded in many of HashiCorp’s enterprise products. This article will focus on the Terraform Enterprise product, where Sentinel is included in the Premium tier. When Sentinel is used within Terraform Enterprise, it provides a way to limit resource deployment from our Terraform code. Every company has policies in place to ensure standard practices and enforce limitations on its users, developers, and even the infrastructure team itself. A few examples of the most common governance policies are:

- Prevent large or unwanted VM/instance sizes

- Standardize on VM/instance images

- Validate the required tags are assigned to resources

- Verify required security is applied to resources

- Restrict deployment of resources to specific regions

Enter Sentinel

Terraform Enterprise can deploy many types of resources across numerous infrastructure providers. In the wrong hands, these deployments can present many problems beyond just a large bill at the end of the month. When Sentinel policies are enforced within Terraform Enterprise, the IT staff can keep infrastructure provisioning tightly controlled. Sentinel policies are small pieces of code written in HashiCorp’s Sentinel policy language. Let’s take a look at how a Sentinel policy is built.

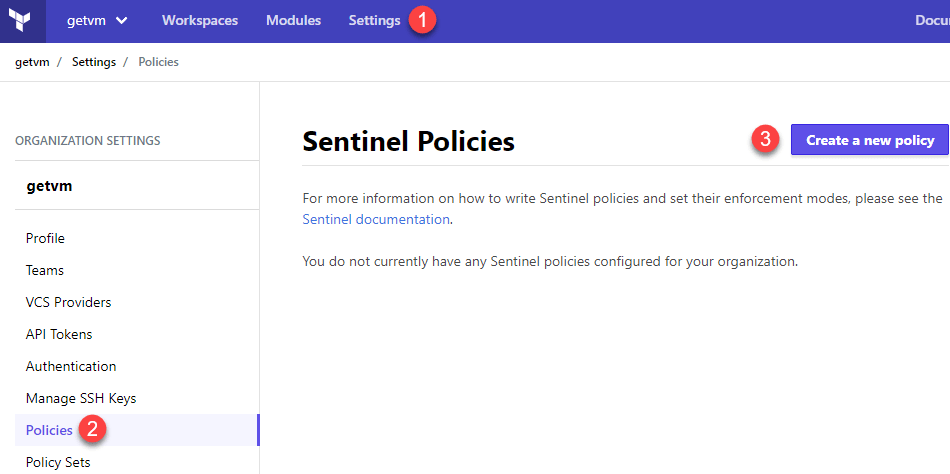

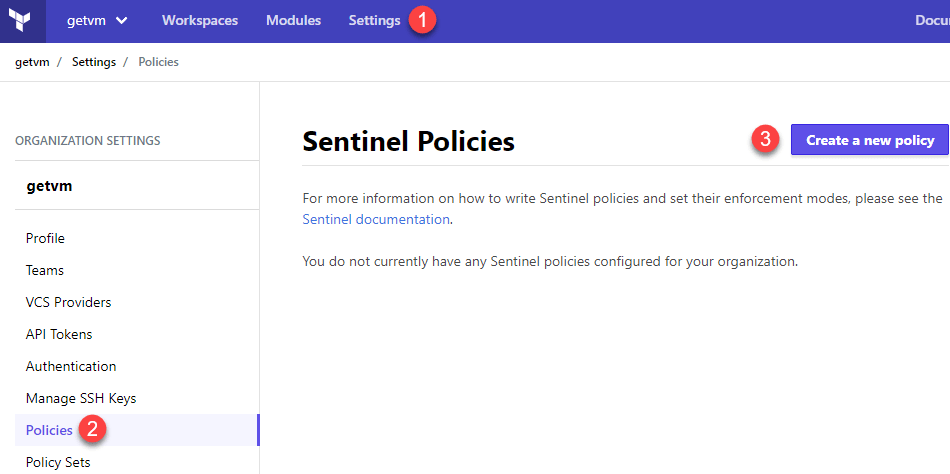

First, log in to your Terraform Enterprise environment. Click Settings in the menu at the top. On the left menu, click Policies. Finally, click Create a New Policy.

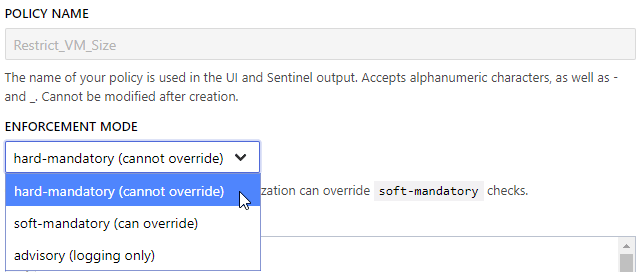

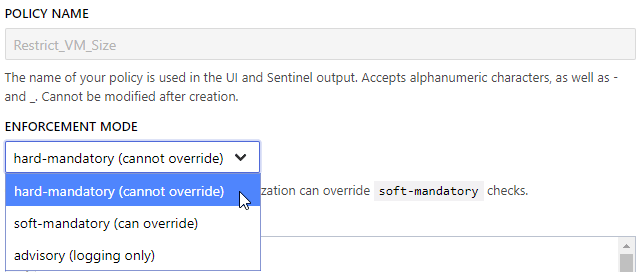

The next screen contains a few items for entry. First, enter a policy name. The next field is the enforcement mode which tells Sentinel how strict it needs to be when enforcing this policy. There are currently three modes to select from:

- Hard-Mandatory – The policy cannot be overridden if the plan is non-compliant.

- Soft-Mandatory – An organization owner may override the policy if the plan is non-compliant.

- Advisory – The result is logged whether the plan is compliant or non-compliant. If the plan is non-compliant, it will continue after the failure is logged.

More information about the enforcement modes can be found within HashiCorp’s official documentation.
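To make the difference between the three modes concrete, here is a small Python model of the decision each one makes. This is only a sketch of the behavior described in the list above. It is not Sentinel code or a Terraform Enterprise API, and the function name `run_may_proceed` and its parameters are my own hypothetical names.

```python
# Illustrative Python model only (not Sentinel, not a Terraform Enterprise API).
# The mode names come from the list above; everything else is hypothetical.

def run_may_proceed(mode: str, policy_passed: bool, owner_override: bool = False) -> bool:
    """Return True if a run may continue to apply under the given mode."""
    if policy_passed:
        return True                # compliant plans always continue
    if mode == "advisory":
        return True                # the failure is logged, the run continues
    if mode == "soft-mandatory":
        return owner_override      # an organization owner may override
    if mode == "hard-mandatory":
        return False               # no override is possible
    raise ValueError(f"unknown enforcement mode: {mode}")


if __name__ == "__main__":
    # Show how each mode treats a non-compliant plan with no override.
    for mode in ("advisory", "soft-mandatory", "hard-mandatory"):
        print(mode, run_may_proceed(mode, policy_passed=False))
```

Only the non-compliant case differs between the modes, which is why starting with a looser mode is a low-risk way to trial a new policy.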

For this example, I’ll leave the enforcement mode set to hard-mandatory.

The last section is where we will enter the policy code. This policy will focus on restricting VM sizes for deployments in Azure. Thankfully, HashiCorp has put a lot of effort into providing examples on GitHub for the public to quickly consume. Within HashiCorp’s “terraform-guides” repo, there is a governance folder where multiple policy examples reside. I will take the “restrict-vm-size.sentinel” code and update the VM sizes for my needs. The code I am using for the policy is shown below:

    import "tfplan"

    get_vms = func() {
        vms = []
        for tfplan.module_paths as path {
            vms += values(tfplan.module(path).resources.azurerm_virtual_machine) else []
        }
        return vms
    }

    # comparison is case-sensitive
    # so including both cases for "v"
    # since we have seen both used
    allowed_vm_sizes = [
        "Standard_B1S",
        "Standard_B1MS",
        "Standard_B2S",
        "Standard_B2MS",
        "Standard_D1_v2",
        "Standard_D1_V2",
        "Standard_D2_v3",
        "Standard_D2_V3",
        "Standard_DS1_v2",
        "Standard_DS1_V2",
        "Standard_DS2_v2",
        "Standard_DS2_V2",
        "Standard_A1_V2",
        "Standard_A1_v2",
        "Standard_A2_V2",
        "Standard_A2_v2",
        "Standard_D1_V2",
        "Standard_D1_v2",
        "Standard_D2_V2",
        "Standard_D2_v2",
    ]

    vms = get_vms()

    vm_size_allowed = rule {
        all vms as _, instances {
            all instances as index, r {
                r.applied.vm_size in allowed_vm_sizes
            }
        }
    }

    main = rule {
        (vm_size_allowed) else true
    }

Diving Into The Code

Now let’s take a look at the code and learn what it is doing, piece by piece. First, it imports a Terraform plugin.

    import "tfplan"

The policy imports the Terraform plan plugin, which contains the library, data, and functions that are used when analyzing a Terraform plan. There are two additional Terraform plugins that can also be imported: “tfconfig” and “tfstate”, which contain information to analyze the Terraform config files and state, respectively. To analyze your code appropriately, import the necessary plugins at the beginning of each policy. More information about the importable plugins for Terraform can be found in HashiCorp’s official documentation.

The next section is a function that scans every module in the Terraform plan and returns all of the Azure virtual machine resources (“azurerm_virtual_machine”) in an array.

    get_vms = func() {
        vms = []
        for tfplan.module_paths as path {
            vms += values(tfplan.module(path).resources.azurerm_virtual_machine) else []
        }
        return vms
    }
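If it helps to see the same idea outside of Sentinel, here is a rough Python model of what this function does. The nested dictionary is a simplified, hypothetical stand-in for the plan data (module path, then resource type, then resource name, then instance index). It is not the real structure of the “tfplan” plugin, and the resource names and sizes are made up.

```python
# Python model of the get_vms logic. PLAN is a simplified, hypothetical
# stand-in for the plan data, not the real tfplan structure.

# module path -> resource type -> resource name -> index -> resource
PLAN = {
    (): {
        "azurerm_virtual_machine": {
            "web": {0: {"applied": {"vm_size": "Standard_B1S"}}},
        },
    },
    ("network",): {
        # No virtual machines in this module.
        "azurerm_virtual_network": {"main": {0: {"applied": {}}}},
    },
    ("db",): {
        "azurerm_virtual_machine": {
            "sql": {
                0: {"applied": {"vm_size": "Standard_D2_v3"}},
                1: {"applied": {"vm_size": "Standard_D2_v3"}},
            },
        },
    },
}


def get_vms(plan):
    """Collect every azurerm_virtual_machine entry from every module."""
    vms = []
    for path in plan:
        resources = plan[path]
        # The empty-dict default plays the role of `else []` in the policy:
        # a module without VMs contributes nothing instead of failing.
        vms += list(resources.get("azurerm_virtual_machine", {}).values())
    return vms


if __name__ == "__main__":
    # Each entry maps instance indexes to resources, one entry per named VM.
    for instances in get_vms(PLAN):
        print(sorted(instances))
```

The point to notice is that each element of the result is itself a collection of instances, which is why the rule later in the policy needs two nested loops.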

The next section creates a new array named “allowed_vm_sizes” that lists the only VM sizes I want to allow when deploying to Azure. As shown below, the original author of the code commented that the comparison is case-sensitive and that VM sizes had been seen with both a capital and a lowercase ‘V’ in the name. Therefore, you’ll notice that many VM sizes appear twice, once with a capital ‘V’ and once with a lowercase ‘V’.

    # comparison is case-sensitive
    # so including both cases for "v"
    # since we have seen both used
    allowed_vm_sizes = [
        "Standard_B1S",
        "Standard_B1MS",
        "Standard_B2S",
        "Standard_B2MS",
        "Standard_D1_v2",
        "Standard_D1_V2",
        "Standard_D2_v3",
        "Standard_D2_V3",
        "Standard_DS1_v2",
        "Standard_DS1_V2",
        "Standard_DS2_v2",
        "Standard_DS2_V2",
        "Standard_A1_V2",
        "Standard_A1_v2",
        "Standard_A2_V2",
        "Standard_A2_v2",
        "Standard_D1_V2",
        "Standard_D1_v2",
        "Standard_D2_V2",
        "Standard_D2_v2",
    ]
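Listing every size twice works, but it is easy to miss a variant. One possible alternative, which the original policy does not use, is to normalize case before comparing. The Python sketch below shows the idea using the distinct sizes from the list above. The names `ALLOWED_VM_SIZES` and `is_allowed` are hypothetical, and the same approach would have to be expressed in Sentinel to be used in a real policy.

```python
# Sketch of a case-insensitive allow-list check. This is an alternative to
# duplicating entries, not what the policy above does.

# Each distinct size from the policy's list, written once.
_SIZES = [
    "Standard_B1S",
    "Standard_B1MS",
    "Standard_B2S",
    "Standard_B2MS",
    "Standard_D1_v2",
    "Standard_D2_v2",
    "Standard_D2_v3",
    "Standard_DS1_v2",
    "Standard_DS2_v2",
    "Standard_A1_v2",
    "Standard_A2_v2",
]

# Lower-case everything once so lookups ignore case.
ALLOWED_VM_SIZES = {size.lower() for size in _SIZES}


def is_allowed(vm_size: str) -> bool:
    """Return True if vm_size matches an allowed size, ignoring case."""
    return vm_size.lower() in ALLOWED_VM_SIZES


if __name__ == "__main__":
    for candidate in ("Standard_D2_V2", "standard_d2_v2", "Standard_D4_v3"):
        print(candidate, is_allowed(candidate))
```

With this approach the list shrinks from twenty entries to eleven, and a size typed as “standard_b1s” would also match.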

The next section compares the “vm_size” value of each VM in the “vms” array to the VM sizes in the “allowed_vm_sizes” array. The rule “vm_size_allowed” evaluates to true only if every VM in the plan uses one of the allowed sizes.

    vms = get_vms()

    vm_size_allowed = rule {
        all vms as _, instances {
            all instances as index, r {
                r.applied.vm_size in allowed_vm_sizes
            }
        }
    }
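The two nested “all” expressions can be read as “for every named VM, for every instance of it, the size must be in the list.” Here is a Python sketch of that same shape. The sample data is made up for illustration and uses a shortened allow-list, and the comparison is case-sensitive, just like the policy.

```python
# Python model of the nested `all` rule. The sample data is hypothetical.

ALLOWED = ["Standard_B1S", "Standard_D2_v3", "Standard_D2_V3"]

# Each element maps instance index -> resource, like the output of get_vms.
COMPLIANT = [
    {0: {"applied": {"vm_size": "Standard_B1S"}}},
    {0: {"applied": {"vm_size": "Standard_D2_v3"}},
     1: {"applied": {"vm_size": "Standard_D2_V3"}}},
]

NON_COMPLIANT = [
    {0: {"applied": {"vm_size": "Standard_B1S"}}},
    {0: {"applied": {"vm_size": "Standard_D16_v3"}}},  # not in the list
]


def vm_size_allowed(vms, allowed_vm_sizes):
    """True only if every instance of every VM uses an allowed size."""
    return all(
        all(r["applied"]["vm_size"] in allowed_vm_sizes for r in instances.values())
        for instances in vms
    )


if __name__ == "__main__":
    print("compliant plan passes:", vm_size_allowed(COMPLIANT, ALLOWED))
    print("non-compliant plan passes:", vm_size_allowed(NON_COMPLIANT, ALLOWED))
```

A single disallowed instance anywhere in the plan is enough to make the whole rule false, and a plan with no VMs at all passes because there is nothing to check.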

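The last section defines “main”, the rule that Sentinel evaluates to decide whether the policy passes. It returns the value of “vm_size_allowed”. The “else true” clause supplies a default, so the policy passes if “vm_size_allowed” evaluates to undefined instead of true or false.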

    main = rule {
        (vm_size_allowed) else true
    }

After a policy has been created, its rules are enforced across all workspaces in the Terraform Enterprise environment. Since these policies can be very restrictive, it is best to first set them to an advisory level and monitor the logs to ensure they are performing as expected. Once you’re satisfied with the results of the policy, increase the enforcement level.

Let’s See It In Action

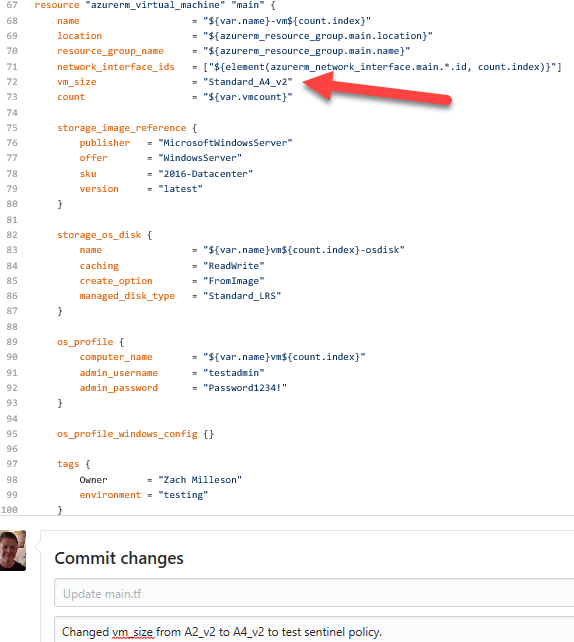

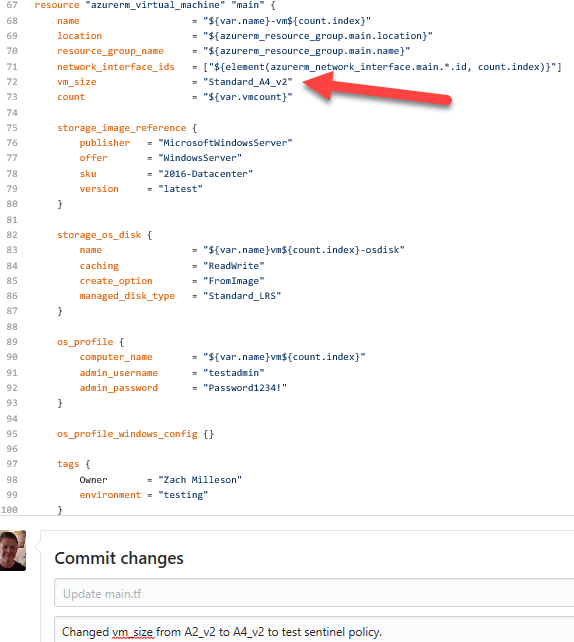

I already have a plan in my Terraform Enterprise environment that I can test against. To test, I made a change to the vm_size in my code repo which will make the plan non-compliant for the new policy.

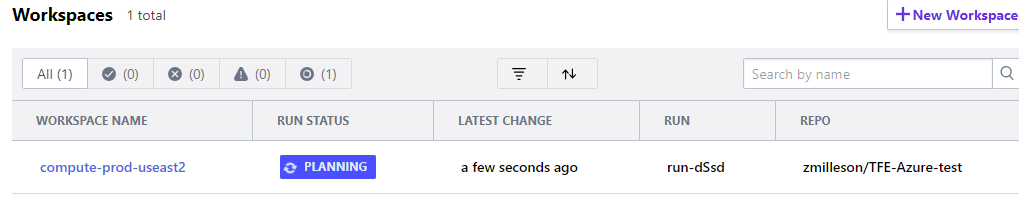

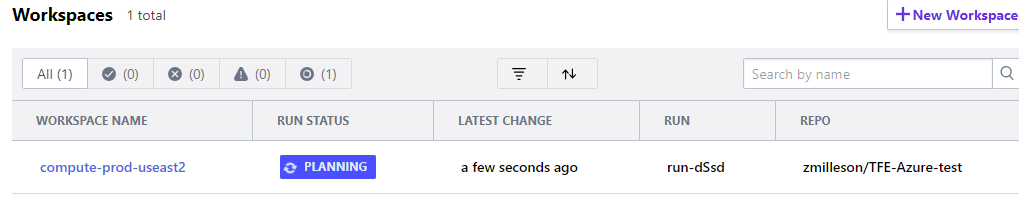

Once I commit the change, the webhook will automatically notify Terraform Enterprise of the change and attempt to queue a new plan.

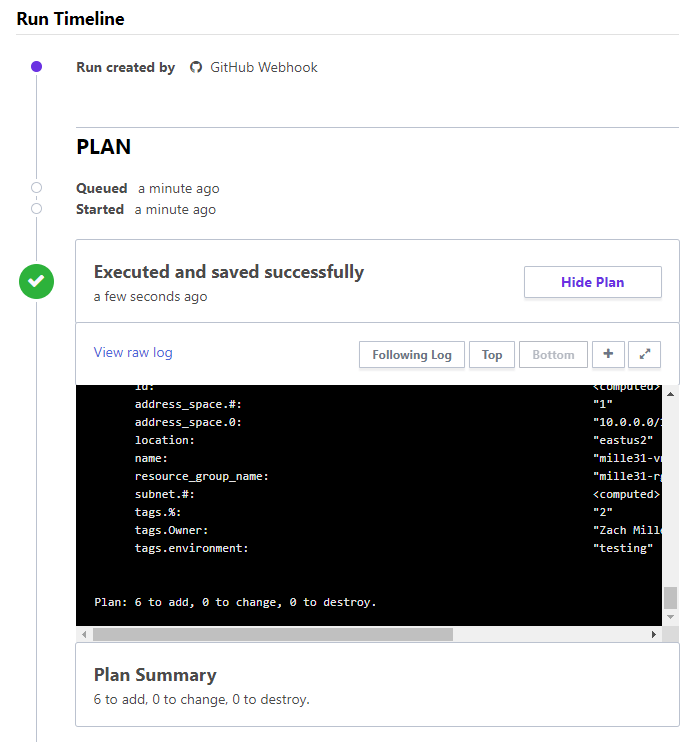

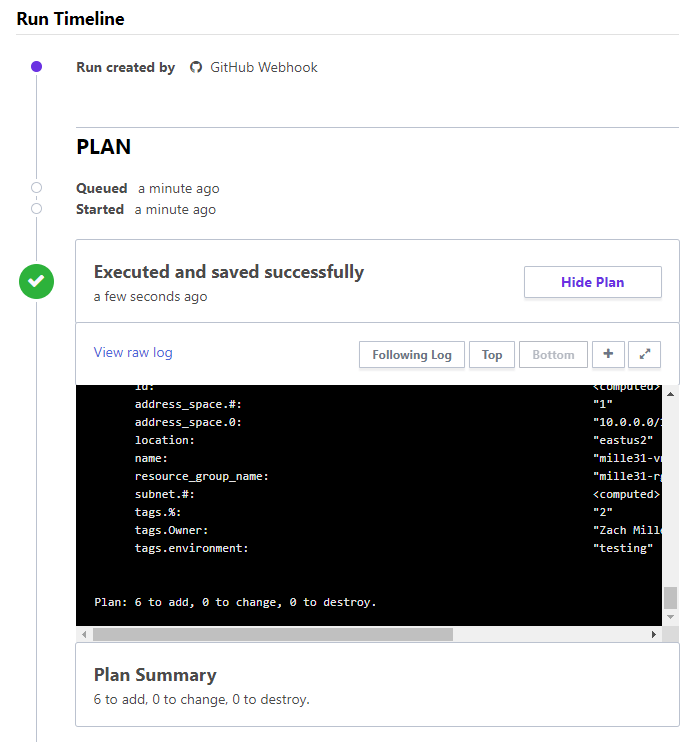

Let’s take a look at the plan that was initiated and its summary.

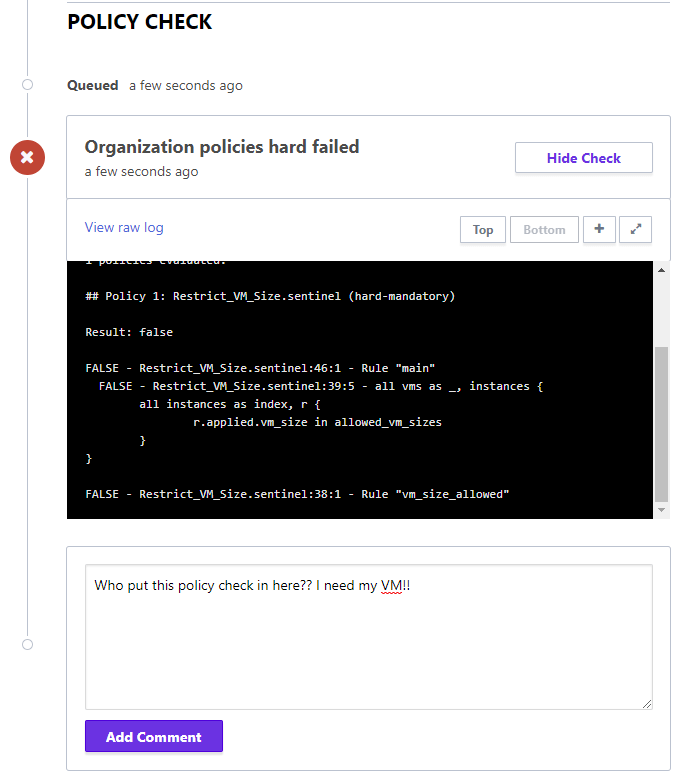

So far, so good. The plan was initiated and successfully ran! Now we can scroll down a bit and see the policy check.

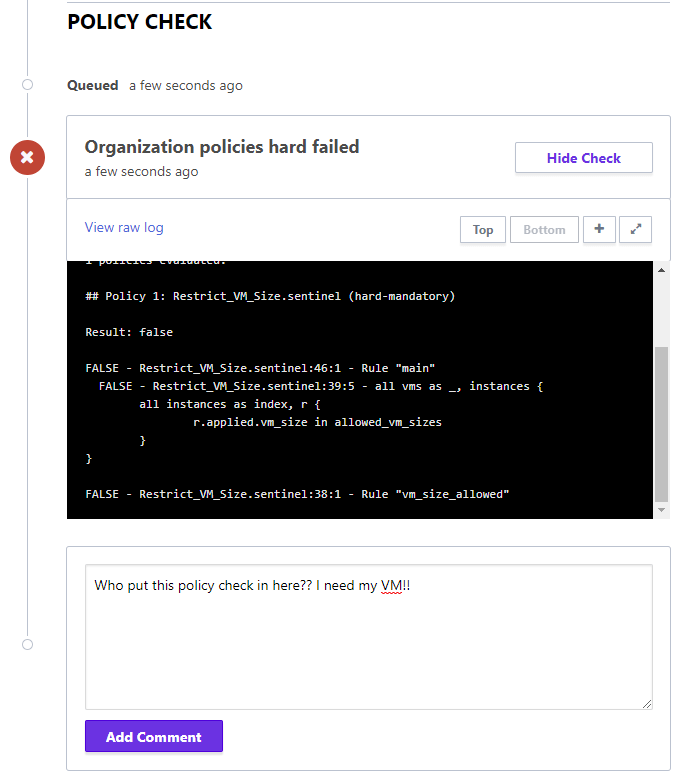

Finally, we see the policy check fail, which prevents a Terraform apply from occurring because the plan was non-compliant with the new Sentinel policy.

Extra Resources

Certainly, there are numerous policies that can be built and tailored for your environment. HashiCorp’s official documentation and the governance folder of the “terraform-guides” repo mentioned earlier are helpful places to discover more about Sentinel.

provided for these various Terraform features is helpful. The official Terraform documentation describing these items can be a bit sparse on examples and info.

provided for these various Terraform features is helpful. The official Terraform documentation describing these items can be a bit sparse on examples and info.

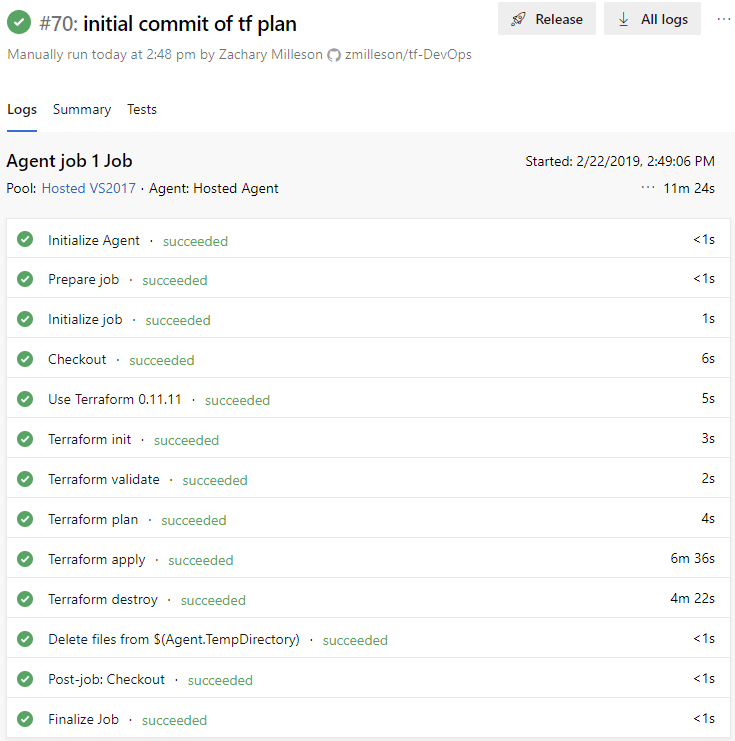

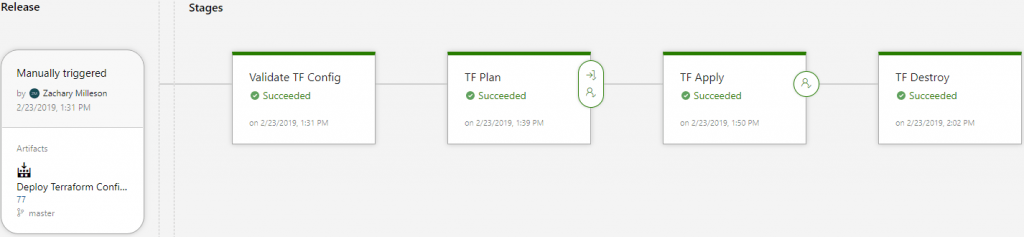

Let’s take a look at the plan that was initiated and its summary.

Let’s take a look at the plan that was initiated and its summary. So far, so good. The plan was initiated and successfully ran! Now we can scroll down a bit and see the policy check.

So far, so good. The plan was initiated and successfully ran! Now we can scroll down a bit and see the policy check. Finally, we see the policy enforcing its policy and prevents a Terraform apply from occurring as the plan was non-compliant with the new Sentinel policy.

Finally, we see the policy enforcing its policy and prevents a Terraform apply from occurring as the plan was non-compliant with the new Sentinel policy.

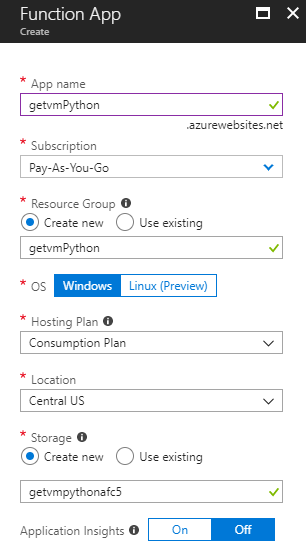

They showed many of the differences and advancements they have made from version 1.0 to 2.0. Azure Functions 2.0 is a big step in the right direction for all but before moving from 1.0 to 2.0, users need to check out if their function app will port directly over. Also, Functions on Linux with the consumption model is now in preview mode!

They showed many of the differences and advancements they have made from version 1.0 to 2.0. Azure Functions 2.0 is a big step in the right direction for all but before moving from 1.0 to 2.0, users need to check out if their function app will port directly over. Also, Functions on Linux with the consumption model is now in preview mode! Before this session, I had not been exposed too much to the security and governance side of things. It is a very important aspect of architecting an Azure solutions in my current position. The new Azure Blueprints feature was discussed briefly. Blueprints looks like it will be a very powerful tool for numerous use cases. This session has encouraged me to dive deeper into the subject.

Before this session, I had not been exposed too much to the security and governance side of things. It is a very important aspect of architecting an Azure solutions in my current position. The new Azure Blueprints feature was discussed briefly. Blueprints looks like it will be a very powerful tool for numerous use cases. This session has encouraged me to dive deeper into the subject.

I watched two sessions from the hang area where I had originally planned to attend in person. One was because the session room was full. The other instance was of convenience when I was already watching another session and saw my next session was slated to be shown on the screen directly in front of me. The turnaround time of getting session videos online was impressive.

I watched two sessions from the hang area where I had originally planned to attend in person. One was because the session room was full. The other instance was of convenience when I was already watching another session and saw my next session was slated to be shown on the screen directly in front of me. The turnaround time of getting session videos online was impressive.

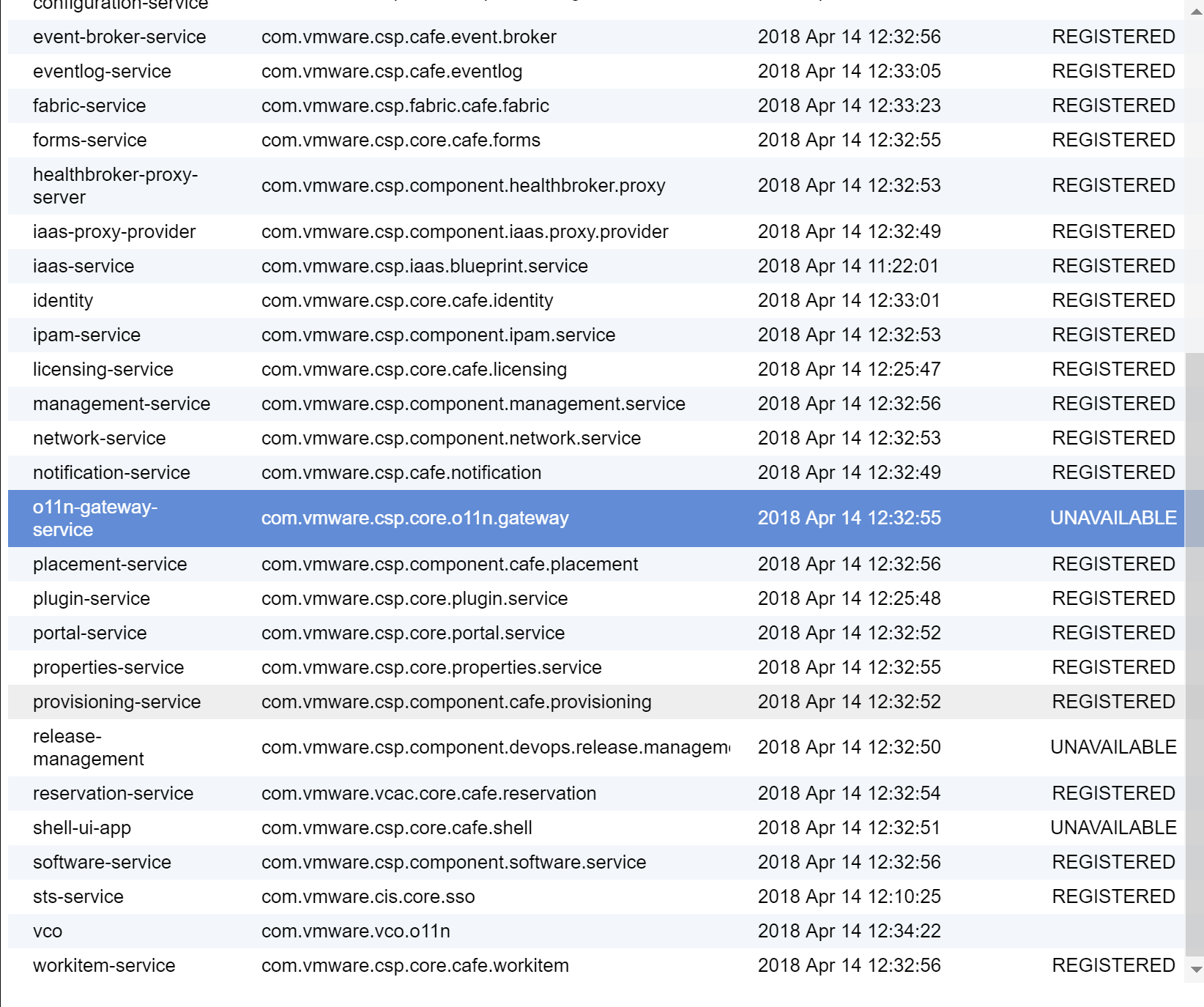

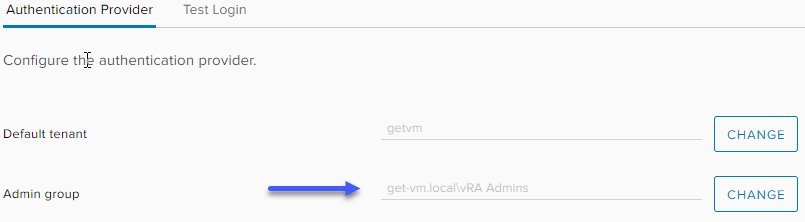

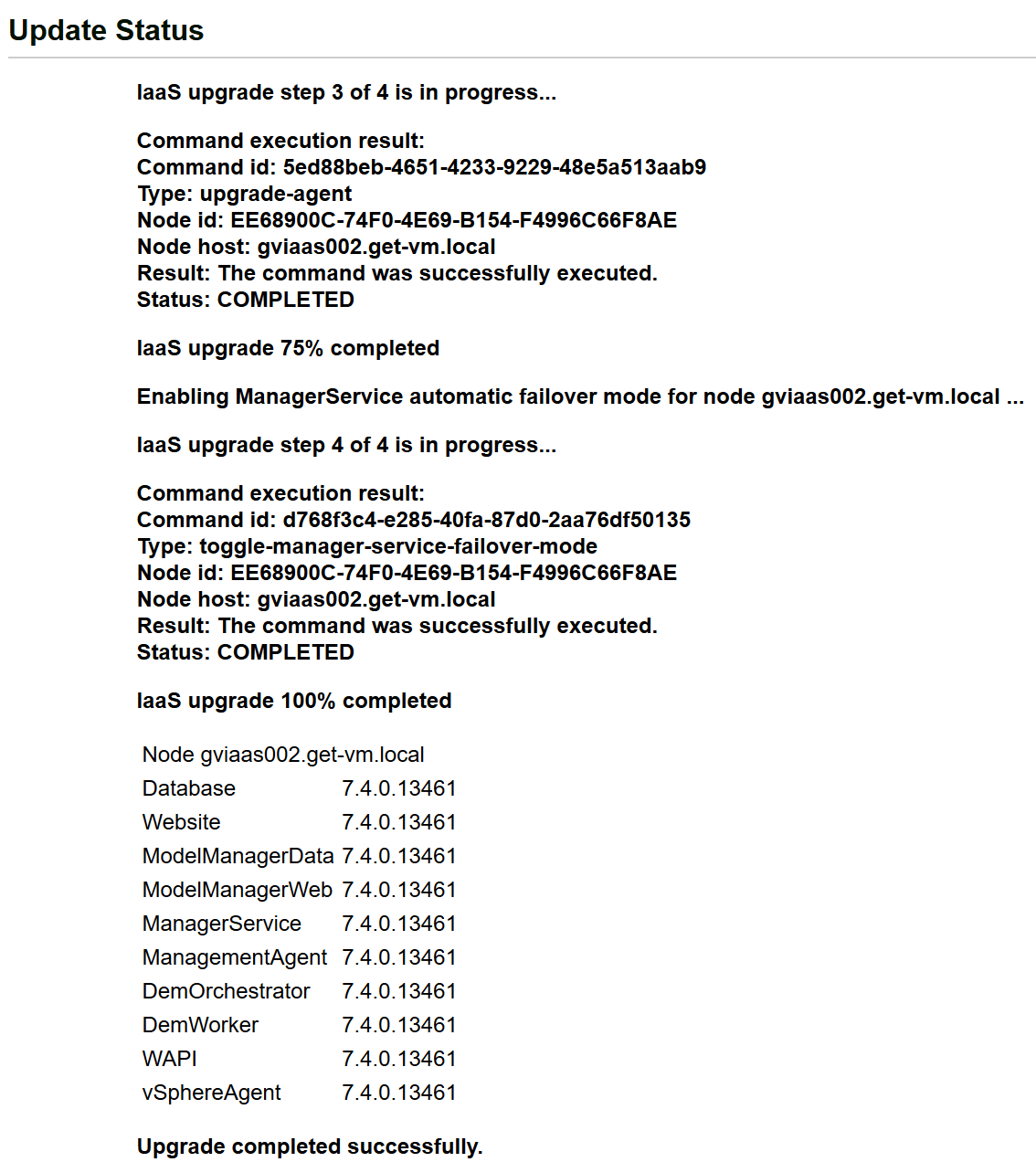

I planned to skip past 7.3.1 and go directly to 7.4. I downloaded the vRA 7.4 ISO file, attached it to the appliance’s CD-ROM drive and clicked check updates from the

I planned to skip past 7.3.1 and go directly to 7.4. I downloaded the vRA 7.4 ISO file, attached it to the appliance’s CD-ROM drive and clicked check updates from the

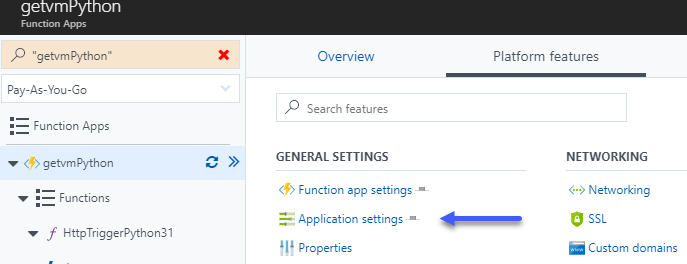

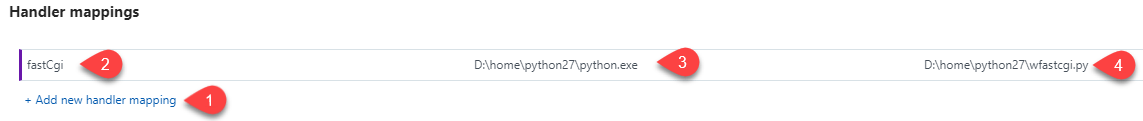

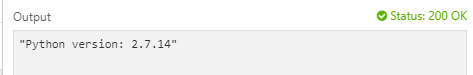

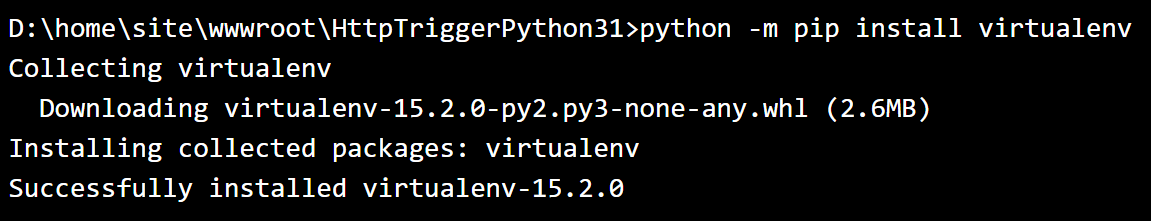

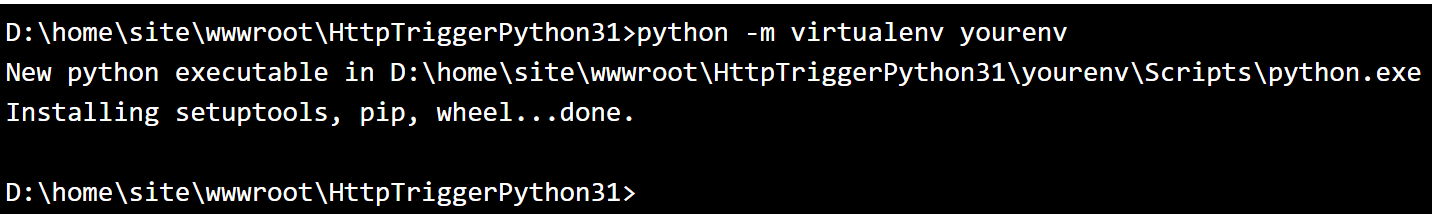

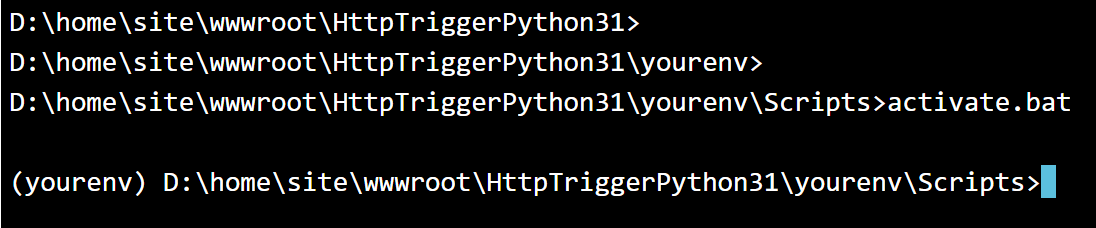

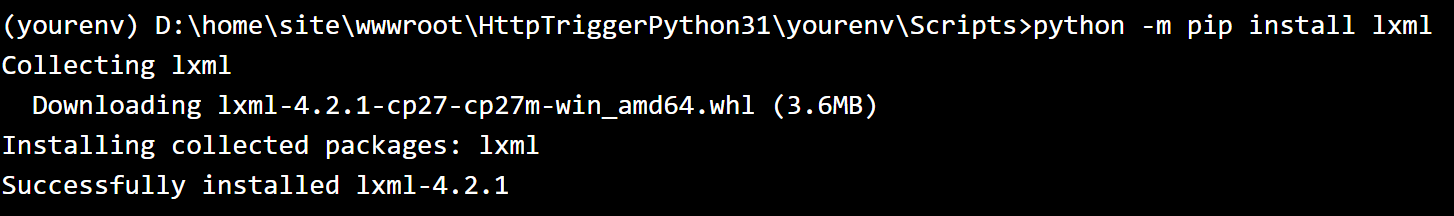

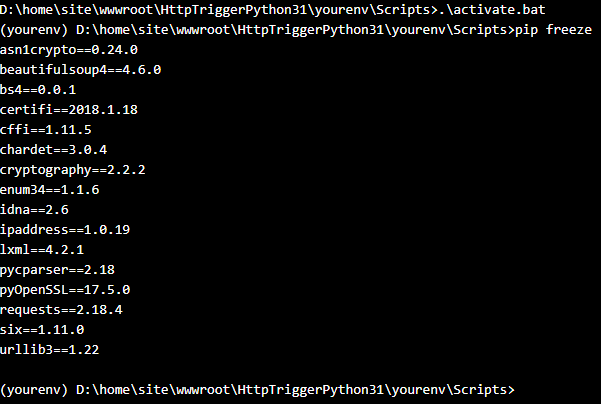

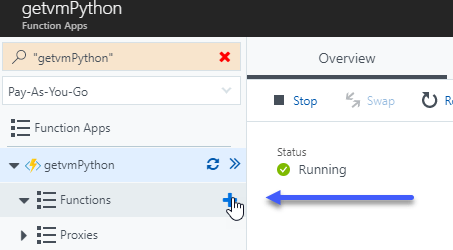

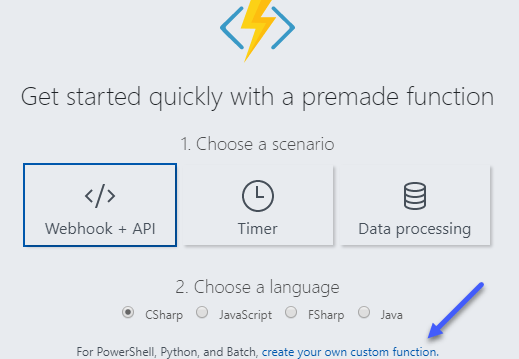

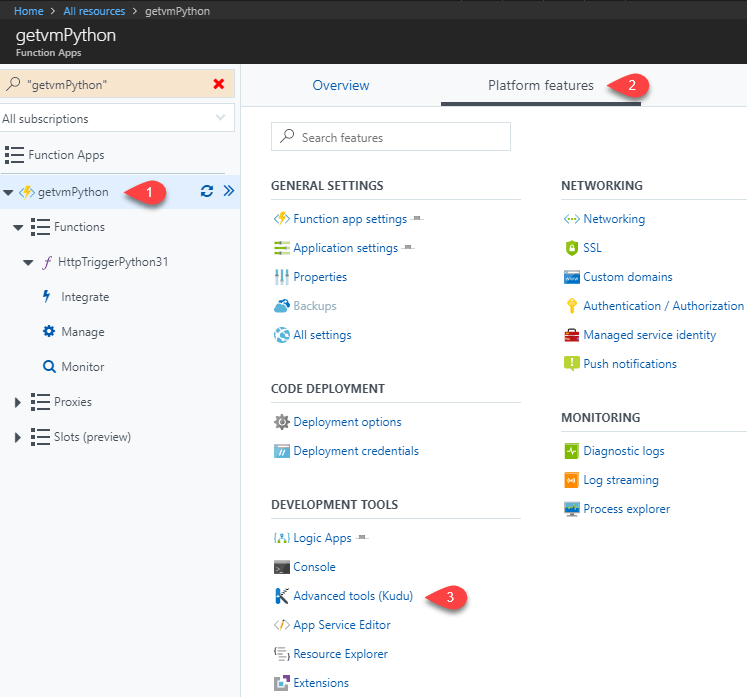

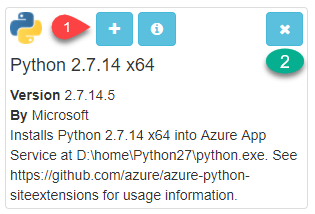

Now that our desired version of Python has been installed, the Handler Mappings need to be updated. Go back to the Function App’s Platform Features page. Then select “Application settings.”

Now that our desired version of Python has been installed, the Handler Mappings need to be updated. Go back to the Function App’s Platform Features page. Then select “Application settings.”